Image Processing

Over the centuries, human beings have developed numerous technologies for representing the visual world, the most prominent being sculpture, painting, photography, and motion pictures. The most recent form of this type of technology is digital image processing. This enormous field includes the principles and techniques for the following:

- The capture of images with devices such as flatbed scanners and digital cameras

- The representation and storage of images in efficient file formats

- The construction of algorithms for image-manipulation programs such as Adobe Photoshop

In this section, we focus on some of the basic concepts and principles used to solve problems in image processing.

Analog and Digital Information

Representing photographic images in a computer poses an interesting problem. As you have seen, computers must use digital information, which consists of discrete values such as individual integers, characters of text, or bits in a bit string. However, the information contained in images, sound, and much of the rest of the physical world is analog. Analog information contains a continuous range of values. You can get an intuitive sense of what this means by contrasting the behaviors of a digital clock and a traditional analog clock. A digital clock shows each second as a discrete number on the display. An analog clock displays the seconds as tick marks on a circle. The clock’s second hand passes by these marks as it sweeps around the clock’s face. This sweep reveals the analog nature of time: between any two tick marks on the analog clock, there is a continuous range of positions or moments of time through which the second hand passes. You can represent these moments as fractions of a second, but between any two such moments are others that are more precise (recall the concept of precision used with real numbers). The ticks representing seconds on the analog clock’s face thus represent an attempt to sample moments of time as discrete values, whereas time itself is continuous, or analog.

Somehow, the continuous analog information in a real visual scene must be mapped into a set of discrete values. This conversion process involves sampling.

Sampling and Digitizing Images

A visual scene projects an infinite set of color and intensity values onto a two-dimensional sensing medium, such as a human being’s retina or a scanner’s surface. If you sample enough of these values, the digital information can represent an image that is more or less indistinguishable to the human eye from the original scene.

Sampling devices measure discrete color values at distinct points on a two-dimensional grid. These values are pixels, which were introduced earlier in this chapter. In theory, the more pixels that are sampled, the more continuous and realistic the resulting image will appear. In practice, however, the human eye cannot discern objects that are closer together than 0.1 mm, so a sampling of 10 pixels per linear millimeter (about 250 pixels per inch, or 62,500 pixels per square inch) would be more than accurate enough.

Thus, a 3-inch by 5-inch image would need 3 * 5 * 62,500 pixels/inch^2 = 937,500 pixels, which is approximately one megapixel. For most purposes, however, you can settle for a much lower sampling density and, thus, fewer pixels per square inch.
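The arithmetic above can be checked with a few lines of Python. This is a minimal sketch. The function name `pixels_needed` is hypothetical, and by default it uses the rounded figure of 250 pixels per inch from the text. (Ten pixels per millimeter is closer to 254 pixels per inch.)

```python
def pixels_needed(width_in, height_in, pixels_per_inch=250):
    """Return the number of samples for a width_in x height_in image.

    pixels_per_inch is a linear density, so the density per square inch
    is its square (250 * 250 = 62,500).
    """
    per_square_inch = pixels_per_inch ** 2
    return width_in * height_in * per_square_inch

print(pixels_needed(3, 5))        # 937500, roughly one megapixel
print(pixels_needed(3, 5, 254))   # 967740 with the unrounded density
```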

Image File Formats

Once an image has been sampled, it can be stored in one of many file formats. A raw image file saves all of the sampled information. This has a cost and a benefit: the benefit is that the display of a raw image will be the most true to life, but the cost is that the file size of the image can be quite large.

Back in the days when disk storage was still expensive, computer scientists developed several schemes to compress the data of an image to minimize its file size. Although storage is now cheap, these formats are still quite economical for sending images across networks. Two of the most popular image file formats are JPEG (Joint Photographic Experts Group) and GIF (Graphics Interchange Format). Various data-compression schemes are used to reduce the file size of a JPEG image. One scheme examines the colors of each pixel’s neighbors in the grid. If any color values are the same, their positions rather than their values are stored, thus potentially saving many bits of storage. Before the image is displayed, the original color values are restored during the process of decompression. This scheme is called lossless compression, meaning that no information is lost. To save even more bits, another scheme analyzes larger regions of pixels and saves a color value that the pixels’ colors approximate. This is called a lossy scheme, meaning that some of the original color information is lost. However, when the image is decompressed and displayed, the human eye usually is not able to detect the difference between the new colors and the original ones.
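The idea of storing repeated neighboring colors only once can be illustrated with run-length encoding, a simple and well-known lossless technique. It is used here only as an illustration of lossless compression, not as the scheme JPEG actually uses. The function names are hypothetical, and each row is assumed to be a list of (r, g, b) tuples.

```python
def rle_encode(row):
    """Compress a row of RGB tuples into [color, count] runs."""
    runs = []
    for color in row:
        if runs and runs[-1][0] == color:
            runs[-1][1] += 1          # same color as its neighbor: extend the run
        else:
            runs.append([color, 1])   # a new color starts a new run
    return runs

def rle_decode(runs):
    """Restore the original row exactly (no information is lost)."""
    row = []
    for color, count in runs:
        row.extend([color] * count)
    return row

white, blue = (255, 255, 255), (0, 0, 255)
row = [white] * 5 + [blue] * 3 + [white] * 2
runs = rle_encode(row)
print(runs)   # [[(255, 255, 255), 5], [(0, 0, 255), 3], [(255, 255, 255), 2]]
print(rle_decode(runs) == row)   # True
```

Ten pixels shrink to three runs here. Images with few long runs gain little from this scheme, which is one reason real formats combine several techniques.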

A GIF image relies on an entirely different compression scheme. The compression algorithm consists of two phases. In the first phase, the algorithm analyzes the color samples to build a table, or color palette, of up to 256 of the most prevalent colors. The algorithm then visits each sample in the grid and replaces it with the key of the closest color in the color palette. The resulting image file thus consists of at most 256 color values and the integer keys of the image’s colors in the palette. This strategy can potentially save a huge number of bits of storage. The decompression algorithm uses the keys and the color palette to restore the grid of pixels for display. Because any color missing from the palette is replaced by its closest match, this scheme is lossy for images that contain more than 256 colors. However, it works very well for images with broad, flat areas of the same color, such as cartoons, backgrounds, and banners.
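The two phases can be sketched in pure Python. This is a simplified illustration, not a real GIF encoder. It assumes squared RGB distance as the measure of the "closest" color, and it leaves out the additional lossless compression that the GIF format applies to the keys. All function names are hypothetical.

```python
from collections import Counter

def build_palette(pixels, size=256):
    """Phase 1: keep up to `size` of the most frequent colors."""
    return [color for color, _ in Counter(pixels).most_common(size)]

def closest_key(color, palette):
    """Return the index (key) of the palette color nearest to `color`."""
    def distance(i):
        return sum((a - b) ** 2 for a, b in zip(color, palette[i]))
    return min(range(len(palette)), key=distance)

def encode(pixels, size=256):
    """Phase 2: replace each pixel with the key of its closest palette color."""
    palette = build_palette(pixels, size)
    keys = [closest_key(p, palette) for p in pixels]
    return palette, keys

def decode(palette, keys):
    """Restore a displayable list of pixels from the palette and the keys."""
    return [palette[k] for k in keys]

pixels = [(250, 0, 0), (250, 0, 0), (0, 0, 250), (245, 5, 0)]
palette, keys = encode(pixels, size=2)
print(palette)                # [(250, 0, 0), (0, 0, 250)]
print(decode(palette, keys))  # [(250, 0, 0), (250, 0, 0), (0, 0, 250), (250, 0, 0)]
```

With a palette limited to two colors, the pixel (245, 5, 0) comes back as (250, 0, 0). That loss is what makes the palette step lossy.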

Image-Manipulation Operations

Image-manipulation programs either transform the information in the pixels or alter the arrangement of the pixels in the image. These programs also provide fairly low-level operations for transferring images to and from file storage.

Among other things, these programs can do the following:

- Rotate an image

- Convert an image from color to grayscale

- Apply color filtering to an image

- Highlight a particular area in an image

- Blur all or part of an image

- Sharpen all or part of an image

- Control the brightness of an image

- Perform edge detection on an image

- Enlarge or reduce an image’s size

- Apply color inversion to an image

- Morph an image into another image

The Properties of Images

When an image is loaded into a program such as a Web browser, the software maps the bits from the image file into a rectangular area of colored pixels for display. The coordinates of the pixels in this two-dimensional grid range from (0, 0) at the upper-left corner of an image to (width - 1, height - 1) at the lower-right corner, where width and height are the image’s dimensions in pixels. Thus, the screen coordinate system for the display of an image is somewhat different from the standard Cartesian coordinate system that we used with Turtle graphics, where the origin (0, 0) is at the center of the rectangular grid.
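The difference between the two coordinate systems can be captured in a small conversion function. This is a hypothetical helper. It assumes a Cartesian system like the one in Turtle graphics, with the origin at the center of the image and y increasing upward, and it assumes integer image dimensions.

```python
def cartesian_to_screen(x, y, width, height):
    """Map center-origin, y-up coordinates to top-left-origin, y-down ones."""
    screen_x = x + width // 2    # shift right so the center becomes width // 2
    screen_y = height // 2 - y   # flip the y axis so that y grows downward
    return (screen_x, screen_y)

# For a 200 x 100 image:
print(cartesian_to_screen(0, 0, 200, 100))      # (100, 50): the center
print(cartesian_to_screen(-100, 50, 200, 100))  # (0, 0): the upper-left corner
```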

The RGB color system is a common way of representing the colors in images. For our purposes, then, an image consists of a width, a height, and a set of color values accessible by means of (x, y) coordinates. A color value consists of the tuple (r, g, b), where the variables refer to the integer values of its red, green, and blue components, respectively.

The images Module

To facilitate our discussion of image-processing algorithms, we now present a small module of high-level Python resources for image processing. This package of resources, which is named images, allows the programmer to load an image from a file, view the image in a window, examine and manipulate an image’s RGB values, and save the image to a file.

Like turtlegraphics, the images module is a non-standard, open-source Python tool. Placing the file images.py and some sample image files in your current working directory will get you started. The images module includes a class named Image. The Image class represents an image as a two-dimensional grid of RGB values. The methods for the Image class are listed in Table . In this table, the variable i refers to an instance of the Image class.

This version of the images module accepts only image files in GIF format.

The following session with the interpreter does three things:

1. Imports the Image class from the images module

2. Instantiates this class using the file named smokey.gif

3. Draws the image

The resulting image display window is shown in Figure

Python raises an error if it cannot locate the file in the current directory, or if the file is not a GIF file. Note also that the user must close the window to return control to the caller of the method draw. If you are working in the shell, the shell prompt will reappear when you do this. The image can then be redrawn, after other operations are performed, by calling draw again.

Once an image has been created, you can examine its width and height, as follows:

```python
>>> image.getWidth()
198
>>> image.getHeight()
149
```

Alternatively, you can print the image’s string representation:

```python
>>> print(image)
File name: smokey.gif
Width: 198
Height: 149
```

The method getPixel returns a tuple of the RGB values at the given coordinates. The following session shows the information for the pixel at position (0, 0), which is at the image’s upper-left corner.

```python
>>> image.getPixel(0, 0)
(194, 221, 114)
```

Instead of loading an existing image from a file, the programmer can create a new, blank image. The programmer specifies the image’s width and height; the resulting image consists of all white pixels. Such images are useful for creating backgrounds, drawing simple shapes, or creating new images that receive information from existing images. The programmer can use the method setPixel to replace an RGB value at a given position in an image. The next session creates a new 150 by 150 image. The pixels along a horizontal line at the middle of the image are then replaced with new blue pixels.

Finally, an image can be saved under its current filename or a different filename. The save operation is used to write an image back to an existing file using the current filename. The save operation can also receive a string parameter for a new filename. The image is written to a file with that name, which then becomes the current filename. The following code saves the new image using the filename horizontal.gif:

```python
>>> image.save("horizontal.gif")
```

Many of the algorithms obtain a pixel from the image, apply some function to the pixel’s RGB values, and reset the pixel with the results. Because a pixel’s RGB values are stored in a tuple, manipulating them is quite easy. Python allows the assignment of one tuple to another in such a manner that the elements of the source tuple can be bound to distinct variables in the destination tuple. For example, suppose you want to increase each of a pixel’s RGB values by 10, thereby making the pixel brighter. You first call getPixel to retrieve a tuple and assign it to a tuple that contains three variables, as follows:

```python
>>> (r, g, b) = image.getPixel(0, 0)
>>> r
194
>>> g
221
>>> b
114
>>> image.setPixel(0, 0, (r + 10, g + 10, b + 10))
```
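One caution applies to this technique. setPixel expects each component to lie between 0 and 255, so adding 10 to a component that is already 250 would produce an invalid value. Below is a minimal sketch of a clamped version. `clamp` and `brighten` are hypothetical helpers that work on a plain RGB tuple, so their result can be passed straight to setPixel.

```python
def clamp(value, low=0, high=255):
    """Keep a color component inside the legal RGB range."""
    return max(low, min(high, value))

def brighten(pixel, amount=10):
    """Return a new (r, g, b) tuple with each component raised by amount."""
    (r, g, b) = pixel   # tuple unpacking, as in the session above
    return (clamp(r + amount), clamp(g + amount), clamp(b + amount))

print(brighten((194, 221, 114)))    # (204, 231, 124)
print(brighten((250, 100, 0), 10))  # (255, 110, 10): red is capped at 255
```

A negative amount darkens the pixel instead, and clamping keeps those components from dropping below 0.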

Converting an Image to Black and White (University Question)

Perhaps the easiest transformation is to convert a color image to black and white. For each pixel, the algorithm computes the average of the red, green, and blue values. The algorithm then resets the pixel’s color values to 0 (black) if the average is closer to 0, or to 255 (white) if the average is closer to 255. The code for the function blackAndWhite follows.

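```python
from images import Image

def blackAndWhite(image):
    """Converts the argument image to black and white."""
    blackPixel = (0, 0, 0)
    whitePixel = (255, 255, 255)
    for y in range(image.getHeight()):
        for x in range(image.getWidth()):
            (r, g, b) = image.getPixel(x, y)
            average = (r + g + b) // 3
            # An average below 128 is closer to 0 (black) than to 255 (white)
            if average < 128:
                image.setPixel(x, y, blackPixel)
            else:
                image.setPixel(x, y, whitePixel)

def main(filename="smokey.gif"):
    image = Image(filename)
    print("Close the image window to continue.")
    image.draw()
    blackAndWhite(image)
    print("Close the image window to quit.")
    image.draw()

main()
```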

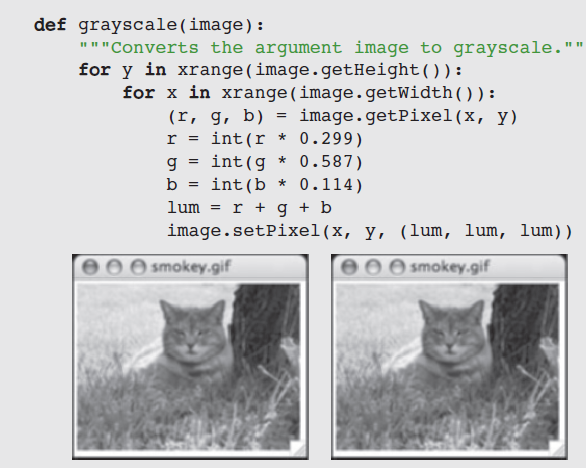

Converting an Image to Grayscale

Black and white photographs are not really just black and white, but also contain various shades of gray known as grayscale. Grayscale can be an economical color scheme, wherein the only color values might be 8, 16, or 256 shades of gray (including black and white at the extremes). Let’s consider how to convert a color image to grayscale.

The human eye is actually more sensitive to green and red than it is to blue. As a result, the blue component appears darker than the other two components. A scheme that combines the three components needs to take these differences in luminance into account. A more accurate method than a simple average would weight green more than red and red more than blue. Therefore, to obtain the new RGB values, instead of adding up the color values and dividing by three, you should multiply each one by a weight factor and add the results. Psychologists have determined that the relative luminance proportions of green, red, and blue are .587, .299, and .114, respectively. Note that these values add up to 1. The next function, grayscale, uses this strategy, and Figure shows the results.
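The weighted formula is lum = .299 * r + .587 * g + .114 * b. The sketch below compares it with a plain average for two pure colors. The function names are illustrative, and this version rounds once after summing the weighted components.

```python
def average_gray(pixel):
    """Naive gray level: the unweighted mean of r, g, and b."""
    (r, g, b) = pixel
    return (r + g + b) // 3

def weighted_gray(pixel):
    """Gray level using the luminance weights .299, .587, and .114."""
    (r, g, b) = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)

green, blue = (0, 255, 0), (0, 0, 255)
print(average_gray(green), average_gray(blue))    # 85 85
print(weighted_gray(green), weighted_gray(blue))  # 150 29
```

The plain average gives pure green and pure blue the same gray level. The weighted version renders green much lighter than blue, which matches how the eye perceives them. Because the weights sum to 1, white (255, 255, 255) stays at 255.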

Copying an Image

Sometimes you need to keep an original image intact while you transform a copy of it. The Image class includes a clone method for this purpose. The method clone builds and returns a new image with the same attributes as the original one, but with an empty string as the filename. The two images are thus structurally equivalent but not identical. This means that changes to the pixels in one image will have no impact on the pixels in the same positions in the other image. The following session demonstrates the use of the clone method:

Blurring an Image

Occasionally, an image appears to contain rough, jagged edges. This condition, known as pixelation, can be mitigated by blurring the image’s problem areas. Blurring makes these areas appear softer, but at the cost of losing some definition. We now develop a simple algorithm to blur an entire image. This algorithm resets each pixel’s color to the average of its own color and the colors of the four pixels that surround it (above, below, to the left, and to the right). The function blur expects an image as an argument and returns a copy of that image with blurring. The function blur begins its traversal of the grid with position (1, 1) and ends with position (width - 2, height - 2). Although this means that the algorithm does not transform the pixels on the image’s outer edges, you do not have to check for the grid’s boundaries when you obtain information from a pixel’s neighbors. Here is the code for blur, followed by an explanation:

The code for blur includes some interesting design work. In the following explanation, the numbers noted appear to the right of the corresponding lines of code:

At #1, the nested auxiliary function tripleSum is defined. This function expects two tuples of integers as arguments and returns a single tuple containing the sums of the values at each position.

At #2, five tuples of RGB values are wrapped in a list and passed with the tripleSum function to the reduce function. This function repeatedly applies tripleSum to compute the sums of the tuples, until a single tuple containing the sums is returned.

At #3, a lambda function is mapped onto the tuple of sums, and the results are collected into a new tuple. The lambda function divides each sum by 5 using integer division. Thus, you are left with a tuple of the average RGB values.

Although this code is still rather complex, try writing it without map and reduce, and then compare the two versions.
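For comparison, here is one way to write the averaging step without map and reduce. This sketch works on a plain list of rows of (r, g, b) tuples rather than on an Image object, so it runs without the images module. `blur_grid` is a hypothetical name. Like blur, it reads only from the original grid and leaves the outer edge unchanged.

```python
def blur_grid(grid):
    """Return a blurred copy of grid, a list of rows of (r, g, b) tuples."""
    height, width = len(grid), len(grid[0])
    new = [list(row) for row in grid]   # copy, so the original stays intact
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            neighbors = [grid[y][x], grid[y][x - 1], grid[y][x + 1],
                         grid[y - 1][x], grid[y + 1][x]]
            # zip(*neighbors) groups all reds, then all greens, then all blues
            new[y][x] = tuple(sum(channel) // 5 for channel in zip(*neighbors))
    return new

black, white = (0, 0, 0), (255, 255, 255)
grid = [[black, black, black],
        [black, white, black],
        [black, black, black]]
print(blur_grid(grid)[1][1])   # (51, 51, 51), that is, 255 // 5
```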

Edge Detection

When artists paint pictures, they often sketch an outline of the subject in pencil or charcoal. They then fill in and color over the outline to complete the painting. Edge detection performs the inverse function on a color image: it removes the full colors to uncover the outlines of the objects represented in the image.

A simple edge-detection algorithm examines the neighbors below and to the left of each pixel in an image. If the luminance of the pixel differs from that of either of these two neighbors by a significant amount, you have detected an edge and you set that pixel’s color to black. Otherwise, you set the pixel’s color to white.

The function detectEdges expects an image and an integer as parameters. The function returns a new black-and-white image that explicitly shows the edges in the original image. The integer parameter allows the user to experiment with various differences in luminance. Figure shows the image of Smokey the cat before and after detecting edges with luminance thresholds of 10 and 20. Here is the code for function detectEdges:

Edge detection: the original image, a luminance threshold of 10, and a luminance threshold of 20

Reducing the Image Size

The size and the quality of an image on a display medium, such as a computer monitor or a printed page, depend on two factors: the image’s width and height in pixels and the display medium’s resolution. Resolution is measured in pixels, or dots, per inch (DPI). When the resolution of a monitor is increased, images appear smaller but their quality increases. Conversely, when the resolution is decreased, images become larger but their quality degrades.

Some devices, such as monitors, can provide good-quality image displays at relatively low DPIs such as 72, whereas printers need much higher DPIs to give good results. The resolution of an image itself can be set before the image is captured. Scanners and digital cameras have controls that allow the user to specify the DPI values. A higher DPI causes the sampling device to take more samples (pixels) across the two-dimensional grid.
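The relationship between pixel dimensions and resolution is a simple division: the displayed size in inches equals the number of pixels divided by the DPI. The following minimal sketch uses the 198 by 149 image from earlier. The 72 and 300 DPI values are assumptions chosen for illustration, and `display_size` is a hypothetical helper.

```python
def display_size(width_px, height_px, dpi):
    """Return the (width, height) in inches of an image shown at dpi."""
    return (width_px / dpi, height_px / dpi)

w, h = display_size(198, 149, 72)
print(f"{w:.2f} x {h:.2f} inches")   # 2.75 x 2.07 inches
w, h = display_size(198, 149, 300)
print(f"{w:.2f} x {h:.2f} inches")   # 0.66 x 0.50 inches: smaller at a higher DPI
```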

Let’s now learn how to reduce the size of an image once it has been captured. Reducing an image’s size can dramatically improve its performance characteristics, such as load time in a Web page and space occupied on a storage medium. In general, if the height and width of an image are each reduced by a factor of N, the number of color values in the resulting image is reduced by a factor of N^2.

A size reduction usually preserves an image’s aspect ratio (that is, the ratio of its width to its height). A simple way to shrink an image is to create a new image whose width and height are a constant fraction of the original image’s width and height. The algorithm then copies the color values of just some of the original image’s pixels to the new image. For example, to reduce the size of an image by a factor of 2, you could copy the color values from every other row and every other column of the original image to the new image.
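Copying every Nth row and every Nth column can be expressed compactly with Python slicing. This sketch assumes the image is a plain list of rows of pixels rather than an Image object, and `shrink_grid` is a hypothetical name. It also shows the N^2 reduction in the number of pixels.

```python
def shrink_grid(grid, factor):
    """Keep every factor-th row and column of grid (a list of rows)."""
    new_height = len(grid) // factor
    new_width = len(grid[0]) // factor
    # A slice step skips the rows and columns in between. The stop value
    # limits the result to new_height x new_width, which matches sizing
    # the new image with integer division.
    return [row[:new_width * factor:factor]
            for row in grid[:new_height * factor:factor]]

# A 6 x 4 grid whose "pixels" are simply their own (x, y) positions
grid = [[(x, y) for x in range(6)] for y in range(4)]
small = shrink_grid(grid, 2)
print(small)   # [[(0, 0), (2, 0), (4, 0)], [(0, 2), (2, 2), (4, 2)]]
print(6 * 4 // (len(small) * len(small[0])))   # 4, that is, 2^2
```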

The Python function shrink exploits this strategy. The function expects the original image and a positive integer shrinkage factor as parameters. A shrinkage factor of 2 tells Python to shrink the image to 1⁄2 of its original dimensions, a factor of 3 tells Python to shrink the image to 1⁄3 of its original dimensions, and so forth. The algorithm uses the shrinkage factor to compute the size of the new image and then creates it. Because a one-to-one mapping of grid positions in the two images is not possible, separate variables are used to track the positions of the pixels in the original image and the new image. The loop traverses the larger image (the original) and skips positions by incrementing its coordinates by the shrinkage factor. The new image’s coordinates are incremented by 1, as usual. The loop continuation conditions are also offset by the shrinkage factor to avoid range errors. Here is the code for the function shrink:

Reducing an image’s size throws away some of its pixel information. Indeed, the greater the reduction, the greater the information loss. However, as the image becomes smaller, the human eye does not normally notice the loss of visual information, so the perceived quality of the image remains about the same.

The results are quite different when an image is enlarged. To increase the size of an image, you have to add pixels that were not there to begin with. In this case, you try to approximate the color values that pixels would receive if you took another sample of the subject at a higher resolution. This process can be very complex, because you also have to transform the existing pixels to blend in with the new ones that are added. Because the image gets larger, the human eye is in a better position to notice any degradation of quality when comparing it to the original. The development of a simple enlargement algorithm is left as an exercise for you.

Images API and Programs

The images module includes a single class named Image. Each Image object represents an image. The programmer can supply the file name of an image on disk when Image is instantiated. The resulting Image object contains pixels loaded from an image file on disk. If a file name is not specified, a width and a height must be specified instead. The resulting Image object contains the specified number of pixels with a single default color.

When the programmer imports the Image class and instantiates it, no window pops up. At that point, the programmer can run various methods with this Image object to access or modify its pixels, as well as save the image to a file. At any point in your code, you may run the draw method with an Image object. At this point, a window will pop up and display the image. The program will then wait for you to close the window before allowing you, either in the shell or in a script, to continue running more code.

The positions of pixels in an image are the same as screen coordinates for display in a window. That is, the origin (0, 0) is in the upper-left corner of the image, and (width - 1, height - 1) is in the lower-right corner.

Images can be manipulated either interactively within a Python shell or from a Python script. Once again, we recommend that the shell or script be launched from a system terminal rather than from IDLE.

Unlike Turtle objects, Image objects cannot be viewed in multiple windows at the same time from the same script. If you want to view two or more Image objects simultaneously, you can create separate scripts for them and launch these in separate terminal windows.

There are two versions of the images module. One version, contained in the file images.py, supports GIF files only and works with Python 2 and 3. The other, contained in the file pilimages.py, supports several common image file formats, such as GIF, JPEG, and PNG, and works with Python 2 only. Despite these differences, the Image class in both versions has the same interface. Here is a list of the Image class methods:

| Image Method | What It Does |
|---|---|
| Image(filename) | Loads an image from the file named filename and returns an Image object that represents this image. The file must exist in the current working directory. |
| Image(width, height) | Returns an Image object of the specified width and height with a single default color. |
| getWidth() | Returns the width of the image in pixels. |
| getHeight() | Returns the height of the image in pixels. |
| getPixel(x, y) | Returns the pixel at the specified coordinates. A pixel is of the form (r, g, b), where the letters are integers representing the red, green, and blue values of a color in the RGB system. |
| setPixel(x, y, (r, g, b)) | Resets the pixel at position (x, y) to the color value represented by (r, g, b). The coordinates must be in the range of the image’s coordinates, and the RGB values must range from 0 through 255. |
| draw() | Pops up a window and displays the image. The user must close the window to continue the program. |
| clone() | Returns an Image object that is an exact copy of the original object. |
| save() | Saves the image to its current file, if it has one. Otherwise, does nothing. |
| save(filename) | Saves the image to the given file and makes it the current file. This is similar to the Save As option in most File menus. |

Sample Programs (Requires the images Module)

Reading and displaying an image file (GIF)

```python
from images import Image

image = Image("smokey.gif")
image.draw()
```

Printing the image details

```python
>>> image.getWidth()
513
>>> image.getHeight()
350
>>> print(image)
File name: smokey.gif
Width: 513
Height: 350
>>> image.getPixel(0, 0)
(204, 213, 102)
```

Creating an image file

```python
from images import Image

image = Image(150, 150)        # a new, blank 150 x 150 image
image.draw()
blue = (0, 0, 255)
y = image.getHeight() // 2     # the middle row
# Paint a three-pixel-thick horizontal line through the middle
for x in range(image.getWidth()):
    image.setPixel(x, y - 1, blue)
    image.setPixel(x, y, blue)
    image.setPixel(x, y + 1, blue)
image.draw()
image.save("horizontal.gif")
```

Creating a red canvas

```python
from images import Image

image = Image(150, 150)
# Visit every pixel, row by row, and paint it red
for y in range(image.getHeight()):
    for x in range(image.getWidth()):
        image.setPixel(x, y, (255, 0, 0))
image.draw()
```

Converting a color image into a black and white image (University Question)

```python
from images import Image

def blackAndWhite(image):
    """Converts the argument image to black and white."""
    blackPixel = (0, 0, 0)
    whitePixel = (255, 255, 255)
    for y in range(image.getHeight()):
        for x in range(image.getWidth()):
            (r, g, b) = image.getPixel(x, y)
            average = (r + g + b) // 3
            if average < 128:
                image.setPixel(x, y, blackPixel)
            else:
                image.setPixel(x, y, whitePixel)

image = Image('smokey.gif')
print('Close the image to see the black and white image')
image.draw()
blackAndWhite(image)
image.draw()
```

Converting a color image into a grayscale image

```python
from images import Image

def grayscale(image):
    """Converts the argument image to grayscale."""
    for y in range(image.getHeight()):
        for x in range(image.getWidth()):
            (r, g, b) = image.getPixel(x, y)
            # Weight each component by its relative luminance
            r = int(r * 0.299)
            g = int(g * 0.587)
            b = int(b * 0.114)
            lum = r + g + b
            image.setPixel(x, y, (lum, lum, lum))

image = Image('smokey.gif')
print('Close the image to see the grayscale image')
image.draw()
grayscale(image)
image.draw()
```

Blurring an Image

```python
from images import Image
from functools import reduce

def blur(image):
    """Builds and returns a new image which is a
    blurred copy of the argument image."""

    def tripleSum(triple1, triple2):                              #1
        (r1, g1, b1) = triple1
        (r2, g2, b2) = triple2
        return (r1 + r2, g1 + g2, b1 + b2)

    new = image.clone()
    for y in range(1, image.getHeight() - 1):
        for x in range(1, image.getWidth() - 1):
            oldP = image.getPixel(x, y)
            left = image.getPixel(x - 1, y)     # To left
            right = image.getPixel(x + 1, y)    # To right
            top = image.getPixel(x, y - 1)      # Above
            bottom = image.getPixel(x, y + 1)   # Below
            sums = reduce(tripleSum,
                          [oldP, left, right, top, bottom])       #2
            averages = tuple(map(lambda total: total // 5, sums))  #3
            new.setPixel(x, y, averages)
    return new

image = Image('smokey.gif')
print('Close the image to see the blurred image')
image.draw()
image = blur(image)   # blur returns a new image, so keep the result
image.draw()
```

The reduce(function, sequence) function applies a two-argument function cumulatively to the items of a sequence, reducing the sequence to a single value. In Python 3, reduce is defined in the functools module and must be imported from there.

How it works:

1. The function is applied to the first two elements of the sequence.

2. The function is then applied to that result and the next element of the sequence, producing a new result.

3. This process continues until no more elements are left in the sequence.

4. The final result is returned to the caller.
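A short example makes these steps concrete. The first call uses reduce with a lambda on plain integers. The second repeats the blur function's use of tripleSum on three RGB tuples, which are made up for illustration.

```python
from functools import reduce

# Evaluated as ((1 + 2) + 3) + 4: each step combines the running result
# with the next item in the list
print(reduce(lambda a, b: a + b, [1, 2, 3, 4]))   # 10

def tripleSum(triple1, triple2):
    """Add two (r, g, b) tuples position by position."""
    (r1, g1, b1) = triple1
    (r2, g2, b2) = triple2
    return (r1 + r2, g1 + g2, b1 + b2)

pixels = [(10, 20, 30), (1, 2, 3), (100, 100, 100)]
print(reduce(tripleSum, pixels))   # (111, 122, 133)
```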

Edge Detection

```python
from images import Image

def detectEdges(image, amount):
    """Builds and returns a new image in which the edges of
    the argument image are highlighted and the colors are
    reduced to black and white."""

    def average(triple):
        (r, g, b) = triple
        return (r + g + b) // 3

    blackPixel = (0, 0, 0)
    whitePixel = (255, 255, 255)
    new = image.clone()
    # Stop one row early (each pixel needs a neighbor below it) and
    # start one column late (each pixel needs a neighbor to its left)
    for y in range(image.getHeight() - 1):
        for x in range(1, image.getWidth()):
            oldPixel = image.getPixel(x, y)
            leftPixel = image.getPixel(x - 1, y)
            bottomPixel = image.getPixel(x, y + 1)
            oldLum = average(oldPixel)
            leftLum = average(leftPixel)
            bottomLum = average(bottomPixel)
            if abs(oldLum - leftLum) > amount or \
               abs(oldLum - bottomLum) > amount:
                new.setPixel(x, y, blackPixel)
            else:
                new.setPixel(x, y, whitePixel)
    return new

image = Image('smokey.gif')
print('Close the image to see the edges')
image.draw()
image = detectEdges(image, 35)
image.draw()
```

Shrinking Images (University Question)

```python
from images import Image

def shrink(image, factor):
    """Builds and returns a new image which is a smaller
    copy of the argument image, by the factor argument."""
    width = image.getWidth()
    height = image.getHeight()
    new = Image(width // factor, height // factor)
    oldY = 0
    newY = 0
    # <= (rather than <) fills exactly height // factor rows, so no
    # row or column of the new image is left blank
    while oldY <= height - factor:
        oldX = 0
        newX = 0
        while oldX <= width - factor:
            oldP = image.getPixel(oldX, oldY)
            new.setPixel(newX, newY, oldP)
            oldX += factor   # skip factor - 1 columns of the original
            newX += 1
        oldY += factor       # skip factor - 1 rows of the original
        newY += 1
    return new

image = Image('smokey.gif')
print('Close the image to see the shrunken image')
image.draw()
image = shrink(image, 2)
image.draw()
```

Python Imaging Library (PIL)

The Python Imaging Library (PIL), now maintained under the name Pillow, is a powerful library for image processing in Python. You can use it to read, manipulate, and display images.

If you haven't already installed Pillow, you can install it using pip:

```shell
pip install pillow
```


Here’s how you can use Pillow to read and display an image:

```python
from PIL import Image

# Step 1: Open an image file
image_path = "your_image.jpg"  # Replace with the path to your image
image = Image.open(image_path)

# Step 2: Display the image
image.show()
```

Additional Information

Supported Formats: Pillow supports a wide range of image formats, including JPEG, PNG, BMP, GIF, and TIFF.

Image Properties: You can access image properties like size, format, and mode:

Example:

```python
from PIL import Image

# Open an image
image = Image.open("your_image.jpg")

# Display image properties
print(f"Size: {image.size}")
print(f"Format: {image.format}")
print(f"Mode: {image.mode}")

# Display the image
image.show()
```

Converting a Color Image to Grayscale

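To convert a color image to grayscale using the Pillow library (PIL) in Python, you can use the .convert() method with the mode 'L'. The 'L' mode stands for luminance. Pillow computes each gray value with the ITU-R 601-2 luma transform, L = R * 299/1000 + G * 587/1000 + B * 114/1000, which uses the same weights as the grayscale function shown earlier.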

```python
from PIL import Image

# Open a color image
image_path = "your_image.jpg"  # Replace with the path to your image
image = Image.open(image_path)

# Convert the image to grayscale
grayscale_image = image.convert("L")

# Save the grayscale image (optional)
grayscale_image.save("grayscale_image.jpg")

# Display the grayscale image
grayscale_image.show()
```

If you want to convert an image to pure black and white (not grayscale), you need to perform thresholding. This means choosing a threshold value, then converting all pixels above that value to white (255) and all other pixels to black (0). The result is often referred to as a binary image.

Here’s how you can do it using the Pillow library (PIL) in Python:

```python
from PIL import Image

# Open a color image
image_path = "your_image.jpg"  # Replace with the path to your image
image = Image.open(image_path)

# Convert the image to grayscale
grayscale_image = image.convert("L")

# Define a threshold value (0-255)
threshold = 128  # You can adjust this value

# Apply thresholding to convert to pure black and white
bw_image = grayscale_image.point(lambda p: 255 if p > threshold else 0)

# Save the black and white image (optional); PNG is lossless, so the
# pure 0 and 255 values are not blurred by JPEG compression
bw_image.save("black_white_image.png")

# Display the black and white image
bw_image.show()
```

Accessing pixel values using the load method

```python
from PIL import Image

# Open an image and convert it to grayscale
image_path = "your_image.jpg"  # Replace with the path to your image
image = Image.open(image_path).convert("L")  # Ensure it's in grayscale mode

# Load the pixel data
pixels = image.load()

# Get image dimensions
width, height = image.size

# Iterate over each pixel
for y in range(height):
    for x in range(width):
        intensity = pixels[x, y]  # Get the intensity value at (x, y)
        print(f"Pixel at ({x}, {y}): Intensity={intensity}")
```
